Advanced memory

Classifying Cache Misses

Three major categories of cache misses:

- Compulsory Misses: also called cold-start or first-reference misses. On the first access to a block, the block is not yet in the cache, so it must be loaded into the cache from main memory (MM). These misses occur even in an infinite cache.

- Capacity Misses: can be reduced by increasing cache size. If the cache cannot contain all the blocks needed during execution of a program, capacity misses will occur due to blocks being replaced and later retrieved.

- Conflict Misses: can be reduced by increasing cache size and/or associativity. If the block-placement strategy is set associative or direct mapped, conflict misses will occur because a block can be replaced and later retrieved when other blocks map to the same location in the cache.
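The three categories can be separated mechanically on an address trace. The sketch below is a minimal illustration, assuming LRU replacement and a trace given as block addresses. The function name `classify_misses` and its parameters are hypothetical. A miss is counted as compulsory if the block was never referenced before, as capacity if a fully associative LRU cache of the same size would also have missed, and as conflict otherwise.

```python
from collections import OrderedDict

def classify_misses(trace, num_blocks, num_sets):
    """Classify the misses of an LRU set-associative cache (illustrative sketch).

    trace      -- iterable of block addresses (ints)
    num_blocks -- total cache capacity, in blocks
    num_sets   -- num_blocks for direct mapped, 1 for fully associative
    """
    ways = num_blocks // num_sets
    sets = [OrderedDict() for _ in range(num_sets)]  # the cache being studied
    full = OrderedDict()  # reference: fully associative LRU cache, same capacity
    seen = set()          # every block referenced so far
    counts = {"hit": 0, "compulsory": 0, "capacity": 0, "conflict": 0}

    for block in trace:
        # Update the fully associative reference cache.
        full_hit = block in full
        if full_hit:
            full.move_to_end(block)
        else:
            if len(full) >= num_blocks:
                full.popitem(last=False)  # evict least recently used
            full[block] = True

        # Update the cache being studied.
        s = sets[block % num_sets]
        if block in s:
            s.move_to_end(block)
            counts["hit"] += 1
        else:
            if len(s) >= ways:
                s.popitem(last=False)
            s[block] = True
            if block not in seen:
                counts["compulsory"] += 1
            elif not full_hit:
                counts["capacity"] += 1
            else:
                counts["conflict"] += 1
        seen.add(block)
    return counts
```

For example, in a direct-mapped cache with 4 blocks, the trace `[0, 4, 0, 4]` keeps evicting the same line: the first two misses are compulsory and the last two are conflict misses, since a fully associative cache of the same size would have held both blocks.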

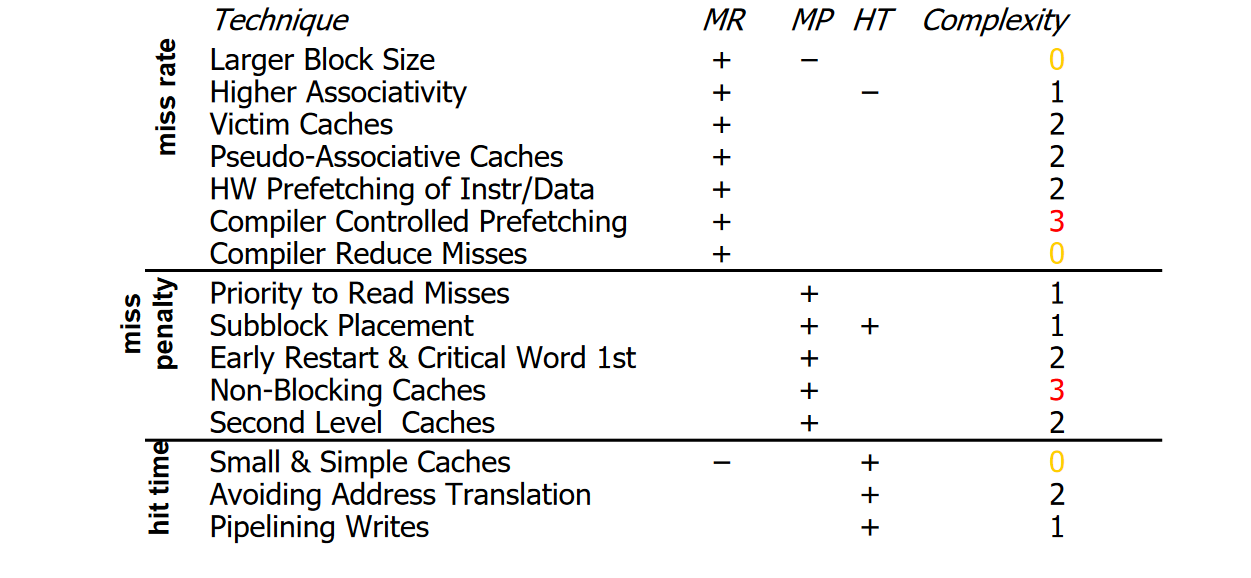

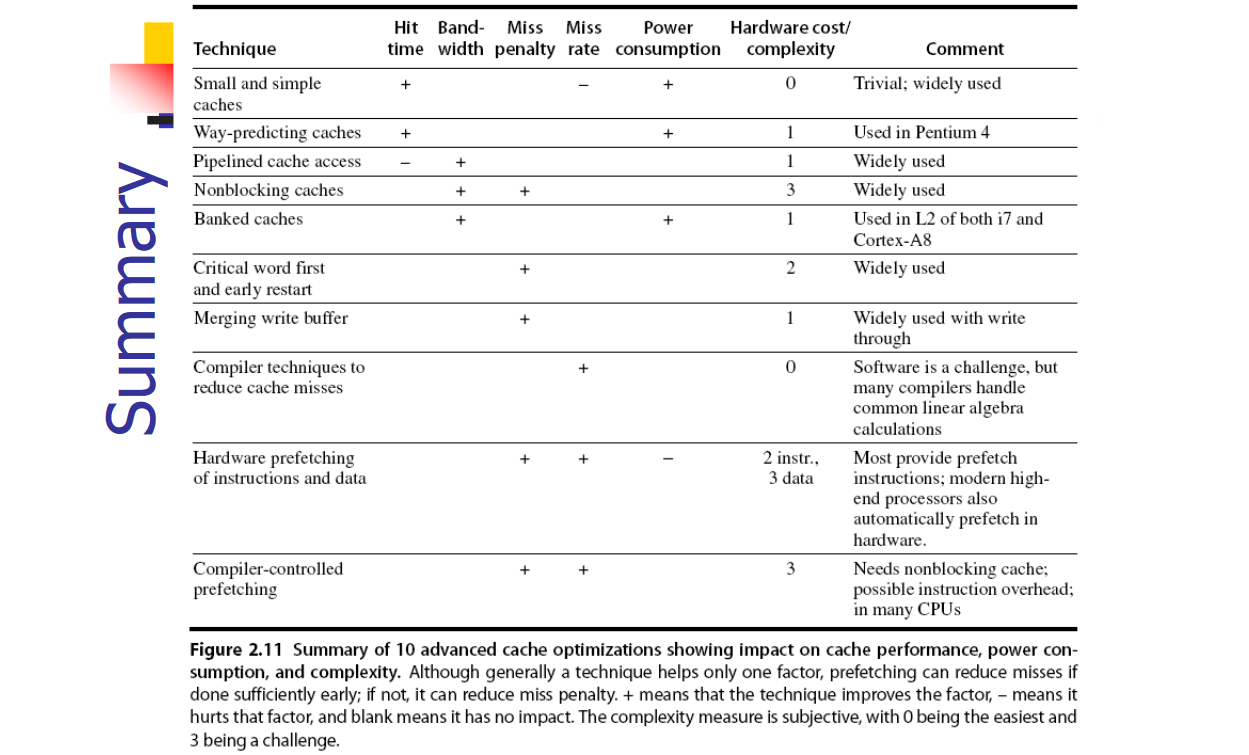

Improving cache performance

$$\text{AMAT} = \text{Hit Time} + \text{Miss Rate} \times \text{Miss Penalty}$$

In order to improve the AMAT we need to:

- Reduce the miss rate

- Reduce the miss penalty

- Reduce the hit time

The overall goal is to balance fast hits and few misses.
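As a quick illustration of the formula, the sketch below evaluates AMAT for a baseline and for each of the three improvements. The numbers (1-cycle hit time, 5% miss rate, 100-cycle miss penalty) are made up for the example and do not come from a real machine.

```python
def amat(hit_time, miss_rate, miss_penalty):
    """Average memory access time, in the same unit as hit_time and miss_penalty."""
    return hit_time + miss_rate * miss_penalty

# Hypothetical baseline: 1-cycle hit, 5% miss rate, 100-cycle miss penalty.
baseline = amat(hit_time=1.0, miss_rate=0.05, miss_penalty=100.0)

# Each lever applied on its own (illustrative values).
lower_miss_rate = amat(hit_time=1.0, miss_rate=0.025, miss_penalty=100.0)
lower_penalty = amat(hit_time=1.0, miss_rate=0.05, miss_penalty=50.0)
lower_hit_time = amat(hit_time=0.5, miss_rate=0.05, miss_penalty=100.0)

for name, value in [("baseline", baseline),
                    ("half miss rate", lower_miss_rate),
                    ("half miss penalty", lower_penalty),
                    ("half hit time", lower_hit_time)]:
    print(f"{name}: {value:.2f} cycles")
```

With these numbers the miss term dominates, so halving the miss rate or the miss penalty helps far more than halving the hit time. With a much lower miss rate the balance would shift towards the hit time.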

1) Reducing the miss rate

1.1) Reducing Misses via Larger Cache Size

The obvious way to reduce capacity misses is to increase the cache capacity.

Drawbacks: a larger cache increases hit time, area, power consumption and cost.

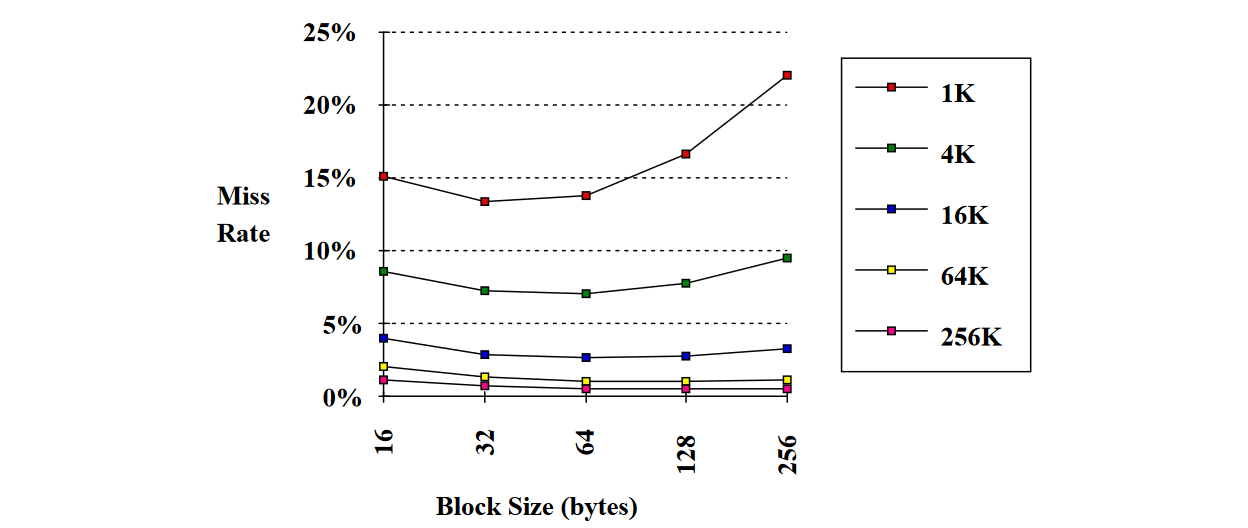

1.2) Reducing Misses via Larger Block Size

Increasing the block size reduces the miss rate only up to a point: once the block becomes too large relative to the cache size, the miss rate rises again.

A larger block size reduces compulsory misses by taking advantage of spatial locality.

Drawbacks:

- Larger blocks increase miss penalty

- For a fixed cache size, larger blocks reduce the number of blocks in the cache, which increases conflict misses (and even capacity misses) if the cache is small.
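The trade-off can be made concrete with a little arithmetic. The sketch below is only an illustration: it assumes a fixed 4 KiB cache, a block-aligned 1 KiB array scanned sequentially once, and no prefetching. The helper names are hypothetical.

```python
def blocks_in_cache(cache_bytes, block_bytes):
    """Number of blocks a cache of fixed size can hold."""
    return cache_bytes // block_bytes

def sequential_scan_misses(array_bytes, block_bytes):
    """Compulsory misses when a block-aligned array is scanned once:
    one miss per block touched (ceiling division)."""
    return -(-array_bytes // block_bytes)

CACHE_BYTES = 4096   # assumed cache size
ARRAY_BYTES = 1024   # assumed array size

for block_bytes in (16, 32, 64, 128):
    print(block_bytes,
          sequential_scan_misses(ARRAY_BYTES, block_bytes),
          blocks_in_cache(CACHE_BYTES, block_bytes))
```

Each doubling of the block size halves the compulsory misses of the scan, but also halves the number of blocks the cache can hold, which leaves fewer places for other data and makes conflicts more likely.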

1.3) Reducing Misses via Higher Associativity

Higher associativity decreases the conflict misses.

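Drawbacks: the added complexity increases hit time, area, power consumption and cost.

A rule of thumb called the "2:1 cache rule": a direct-mapped cache of size N has about the same miss rate as a 2-way set-associative cache of size N/2:

$$\text{Miss Rate}_{\text{direct mapped}}(N) \approx \text{Miss Rate}_{\text{2-way}}(N/2)$$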

Multibanked Caches

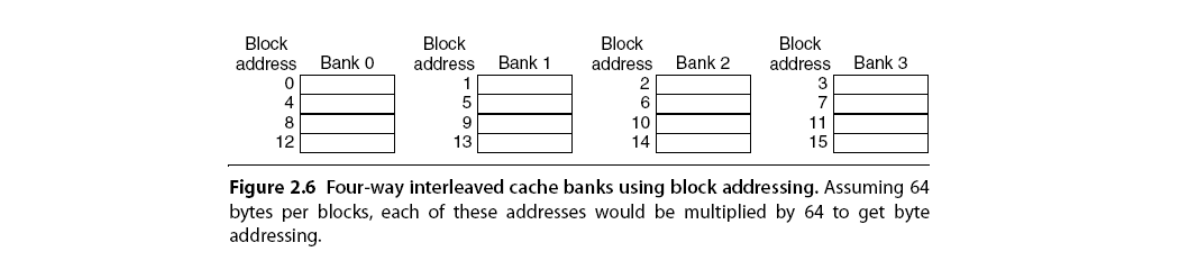

Multibanked caches introduce a sort of associativity. The cache is organized as independent banks that can be accessed simultaneously, which increases the cache bandwidth.

Banks are interleaved by block address, so that consecutive block addresses fall in consecutive banks and each bank is accessed in turn (sequential interleaving):

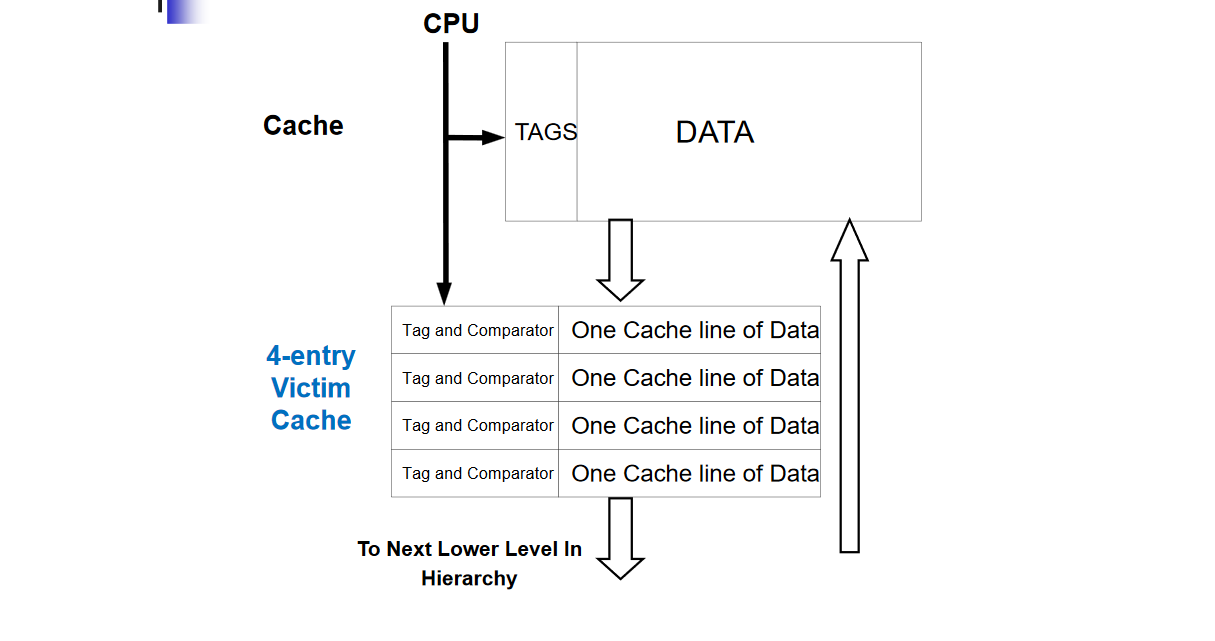
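Sequential interleaving amounts to a modulo on the block address. The sketch below assumes the usual mapping, in which the bank number is the block address modulo the number of banks and the remaining bits select the position inside the bank. The function names are hypothetical.

```python
def bank_of(block_address, num_banks):
    """Bank that holds a block under sequential interleaving."""
    return block_address % num_banks

def index_within_bank(block_address, num_banks):
    """Position of the block inside its bank."""
    return block_address // num_banks

NUM_BANKS = 4  # assumed number of banks
for block_address in range(8):
    print(block_address,
          bank_of(block_address, NUM_BANKS),
          index_within_bank(block_address, NUM_BANKS))
```

With 4 banks, block addresses 0 to 7 land in banks 0, 1, 2, 3, 0, 1, 2, 3, so a run of consecutive blocks spreads across all banks and can be served in parallel.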

1.4) Reduce Misses (and Miss Penalty) via a “Victim Cache”

A victim cache is a small fully associative cache used as a buffer for blocks discarded from the cache, to better exploit temporal locality. It is placed between the cache and its refill path towards the next lower level in the hierarchy. On a miss, it is checked for the required data before going to lower-level memory.

If the block is found in the victim cache, the victim block and the cache block are swapped.
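The lookup and swap can be sketched as follows. This is a minimal model, not a description of any particular hardware: it assumes a direct-mapped main cache, tracks block addresses only (no data), and replaces victim-cache entries in FIFO order, which is an assumption made for the example. The class name `DirectMappedWithVictim` is hypothetical.

```python
from collections import OrderedDict

class DirectMappedWithVictim:
    """Direct-mapped cache backed by a small fully associative victim cache."""

    def __init__(self, num_lines, victim_entries):
        self.num_lines = num_lines
        self.lines = [None] * num_lines   # one block address per line
        self.victim_entries = victim_entries
        self.victim = OrderedDict()       # oldest entry first (FIFO, assumed)

    def _put_victim(self, block):
        if self.victim_entries == 0:
            return
        if len(self.victim) >= self.victim_entries:
            self.victim.popitem(last=False)  # drop the oldest victim
        self.victim[block] = True

    def access(self, block):
        idx = block % self.num_lines
        resident = self.lines[idx]
        if resident == block:
            return "hit"
        if block in self.victim:
            # Swap: requested block moves into the cache,
            # the block it displaces becomes the new victim.
            del self.victim[block]
            self.lines[idx] = block
            if resident is not None:
                self._put_victim(resident)
            return "victim hit"
        # Miss in both: fetch from the lower level, keep the evicted block.
        self.lines[idx] = block
        if resident is not None:
            self._put_victim(resident)
        return "miss"

cache = DirectMappedWithVictim(num_lines=4, victim_entries=2)
results = [cache.access(b) for b in [0, 4, 0, 4]]
```

Blocks 0 and 4 map to the same line, so without the victim cache the last two accesses would be conflict misses that go to the lower level. Here they are served by swaps with the victim cache.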